Multilateration for Tracking of Airplanes

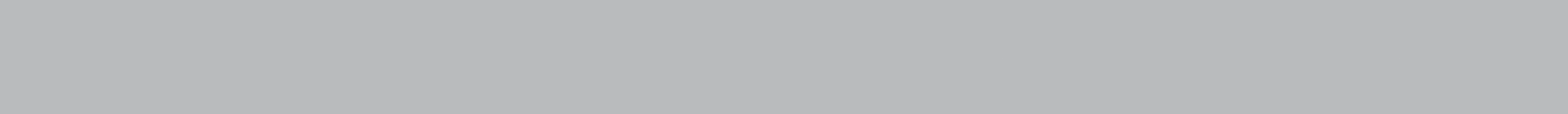

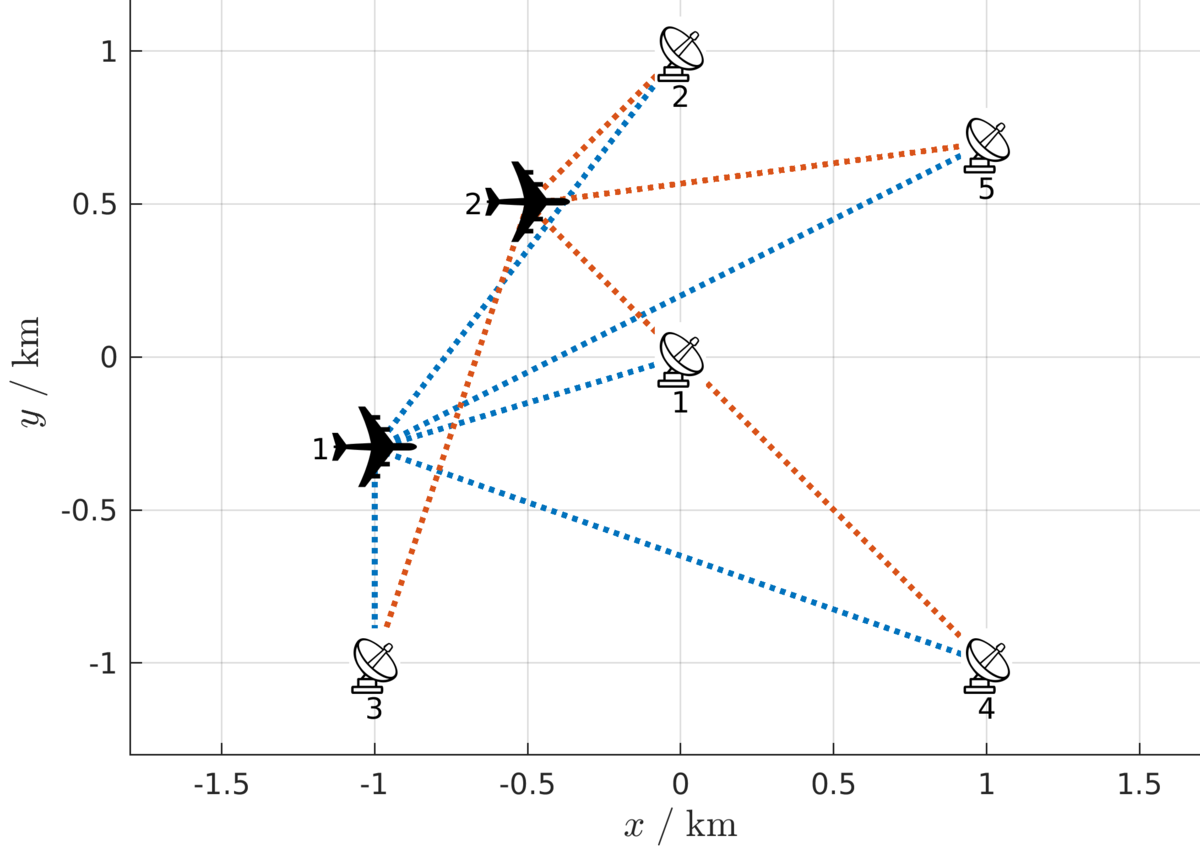

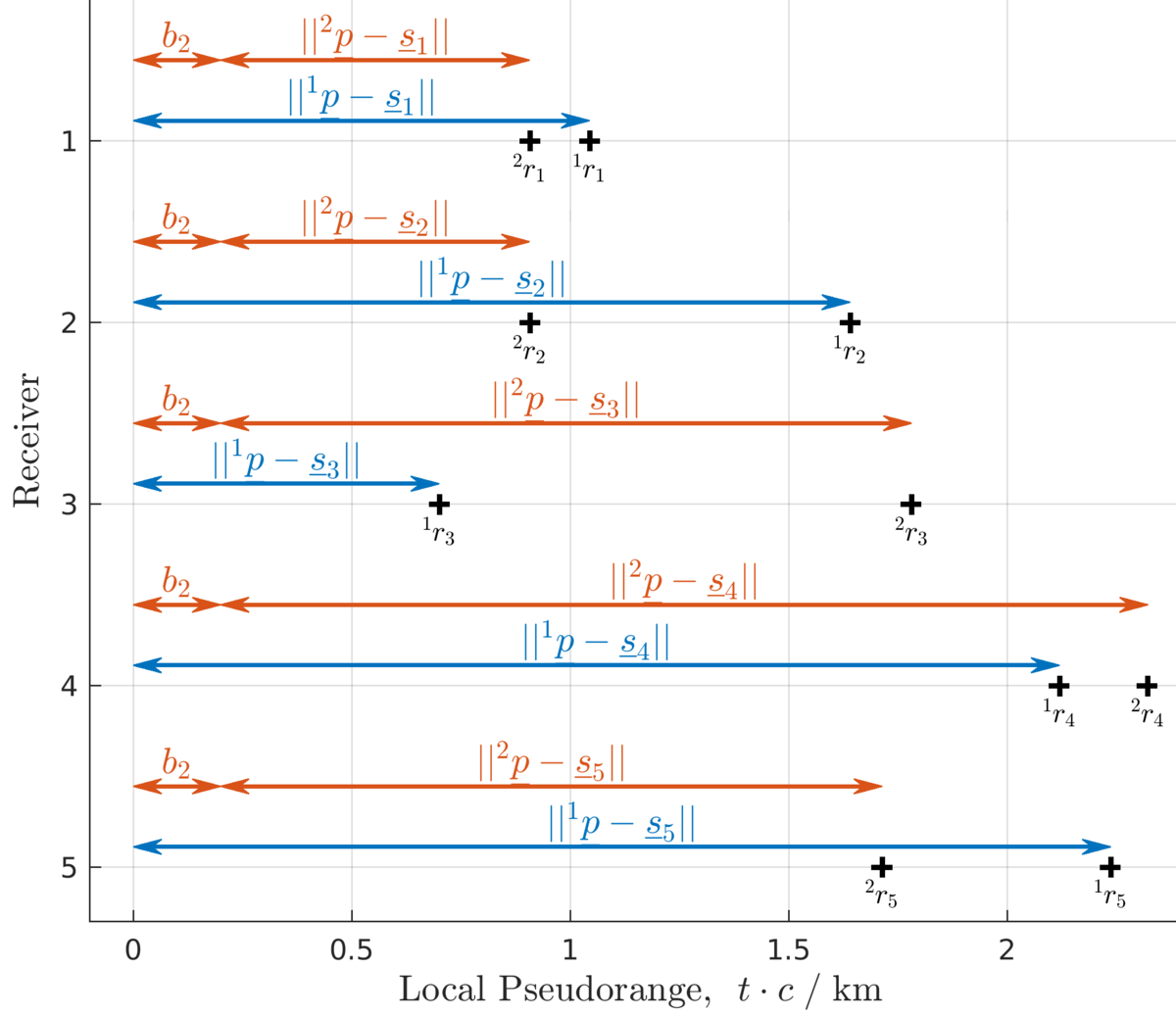

Multilateration is a technique for estimating the position of a transmitter of propagating waves from measurements taken by sensors at several spatially separated positions. It is applied, for example, in radar, sonar, and radio astronomy [TDOA_FKIE]. We set up a measurement equation that calculates the time of arrival (TOA) of the signal at a given sensor from its distance to the source and the propagation velocity. When enough measurements are available, only one aircraft position can reproduce the observed TOAs. Sometimes we work with time differences of arrival (TDOA) instead, which do not require knowledge of the emission time and can be calculated via cross-correlation of the sampled input signals. Current research at our institute covers multitarget tracking [SPIE17_Hanebeck], synchronization-free localization [Fusion19_Frisch], and multisensor data fusion [Fusion18_Radtke].
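The TOA measurement equation can be made concrete with a short sketch. It assumes radio signals travelling at the speed of light, noise-free TOAs, a hypothetical layout of six ground stations, and an initial guess that is already close to the aircraft (for example, from the previous track estimate). Position and emission time are then estimated with a plain Gauss-Newton iteration. Gauss-Newton is one standard solver for this least-squares problem, not necessarily the one used at ISAS, and the function names are illustrative.

```python
import numpy as np

C = 299_792_458.0  # propagation speed in m/s (radio signals: speed of light)

def predicted_toa(p, t0, sensors):
    """Measurement equation: TOA at each sensor = emission time + distance / C."""
    return t0 + np.linalg.norm(sensors - p, axis=1) / C

def multilaterate(toa, sensors, p_init, iters=20):
    """Gauss-Newton estimate of the source position p and emission time t0."""
    # Unknowns are p and the range offset b = C * t0 (in metres), which keeps
    # the columns of the Jacobian on a similar scale.
    x = np.append(np.asarray(p_init, dtype=float), 0.0)
    ranges = C * np.asarray(toa, dtype=float)
    for _ in range(iters):
        diff = x[:-1] - sensors
        dist = np.linalg.norm(diff, axis=1)
        residual = ranges - (dist + x[-1])
        # Jacobian of dist + b w.r.t. [p, b]: unit line-of-sight vectors and ones
        J = np.hstack([diff / dist[:, None], np.ones((len(sensors), 1))])
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        x += step
        if np.linalg.norm(step) < 1e-6:
            break
    return x[:-1], x[-1] / C

# Hypothetical ground stations (x, y, height) in metres and a simulated aircraft.
sensors = np.array([[0, 0, 0], [30e3, 0, 200], [0, 30e3, 500],
                    [30e3, 30e3, 100], [15e3, -10e3, 800], [10e3, 20e3, 300]],
                   dtype=float)
true_p, true_t0 = np.array([12e3, 18e3, 9e3]), 1e-3
toa = predicted_toa(true_p, true_t0, sensors)

# Initial guess, e.g. from the previous track estimate (off by several hundred metres).
p_hat, t0_hat = multilaterate(toa, sensors, p_init=true_p + [500.0, -400.0, 300.0])
```

Treating the emission time as an unknown is what links the TOA and TDOA formulations: differencing the TOAs of two sensors cancels t0. With real, noisy data from ground-based stations, the altitude is typically much less well determined than the horizontal position.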

We estimate aircraft positions in datasets from our cooperation partner Frequentis Comsoft in Durlach. We develop advanced techniques to localize aircraft even in cases that are currently problematic, such as sensors with non-synchronized clocks (for example, due to a GPS outage) or multiple aircraft sending indistinguishable messages.

At ISAS, we also apply multilateration to localize sound sources in experiments at the institute. Using multiple directional sensors, we aim to bridge multilateration and directional statistics [Fusion18_Li-SE2]. With new distributed information fusion algorithms, we aim to merge directional measurements from multiple sensor nodes [MFI19_Radtke].
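For acoustic experiments, TDOA estimation via cross-correlation of the sampled signals can be sketched as follows. The signals, sampling rate, delay, and speed of sound (343 m/s, roughly air at room temperature) are assumptions for illustration. Real recordings add noise and reverberation, for which generalized cross-correlation variants are commonly used.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at about 20 degrees Celsius)

def estimate_tdoa(sig_a, sig_b, fs):
    """Delay of sig_b relative to sig_a in seconds, from the cross-correlation peak."""
    corr = np.correlate(sig_b, sig_a, mode="full")
    # In "full" mode, index len(sig_a) - 1 corresponds to zero lag.
    lag = int(np.argmax(corr)) - (len(sig_a) - 1)
    return lag / fs

# Simulated broadband source recorded by two microphones; the second one
# receives the signal 25 samples later (hypothetical values, no noise).
fs = 48_000
rng = np.random.default_rng(1)
source = rng.standard_normal(4000)
delay = 25
mic_a = source
mic_b = np.concatenate([np.zeros(delay), source[:-delay]])

tdoa = estimate_tdoa(mic_a, mic_b, fs)
range_difference = SPEED_OF_SOUND * tdoa  # difference of source distances to the mics
```

Each TDOA constrains the source to one sheet of a hyperbola (or hyperboloid in 3-D), and several microphone pairs together pin down its position.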

Contact: Daniel Frisch

Motion Compression in Virtual Reality

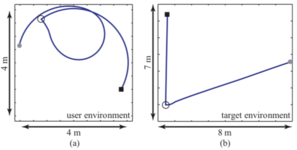

Our research in Virtual Reality focuses on navigation interfaces using natural locomotion, i.e., with a user’s own motions instead of a mouse or a keyboard. The idea is to navigate the virtual world by walking in a real environment (RE) and translating that motion into the virtual environment (VE). However, the VE is generally much larger than the available walking space, so mechanisms are needed that transform the walking motions such that users can reach the entire virtual map.

A framework to achieve this is motion compression [SPIE03], which is divided into three components. First, path prediction employs heuristics to determine which path a user is most likely to take, using, for example, techniques from intention recognition [SMC04_Roessler] and control theory [MECHROB04_Roessler]. Second, path compression transforms the predicted path so that it fits within the RE while retaining traversed distances and turn angles. One way to achieve this is to find the compressed path with the minimal amount of curvature change, which can be formulated and solved as an optimization problem. Finally, user guidance applies transformations to the camera pose to guide the user along the compressed path in the RE while giving them the impression of moving along the predicted path in the VE. This is achieved, for example, by continuously rotating the camera by small amounts, exploiting the fact that vision overrides proprioceptive input, i.e., we trust what we see more than the motions we feel.
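To illustrate how path compression can retain the traversed distance while changing curvature, here is a minimal sketch of the simplest case: a straight virtual path mapped onto a circular arc of constant curvature 1/radius in the RE. The function name, the 5 m radius, and the step count are hypothetical. The motion compression framework optimizes the compressed path more generally rather than fixing a single arc.

```python
import math

def compress_straight_path(length, radius, n_steps):
    """Map a straight virtual path onto a circular arc of the given radius in the RE.

    Returns real positions, virtual positions, and the per-step change of the
    offset between virtual and real heading that user guidance has to apply.
    """
    ds = length / n_steps
    real, virtual, heading_offset_steps = [], [], []
    for k in range(n_steps + 1):
        s = k * ds  # traversed distance, identical in RE and VE
        # Arc starting at the origin, heading along +x, curving left (centre at (0, radius))
        real.append((radius * math.sin(s / radius), radius * (1.0 - math.cos(s / radius))))
        virtual.append((s, 0.0))
        if k > 0:
            # Real heading grows by ds / radius per step while the virtual heading
            # stays constant, so their offset changes by -ds / radius.
            heading_offset_steps.append(-ds / radius)
    return real, virtual, heading_offset_steps

# A 20 m straight virtual corridor walked on a circle of radius 5 m in the room.
real, virtual, offsets = compress_straight_path(20.0, 5.0, 200)
```

The per-step offset is the small rotation that would be applied gradually to the camera so that the walked arc still looks straight; a larger radius means a smaller per-step rotation but needs more walking space.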

Motion compression can be used in many disciplines, ranging from extended-range telepresence [ICINCO09_Perez] to pedestrian modeling [RB12_Kretz] and robotic teleoperators [IROS05_Roessler]. An important application is the ROBDEKON project, which develops solutions for decontaminating regions polluted with chemical or radioactive waste that can only be explored by remote agents such as robots and drones.

Contact:

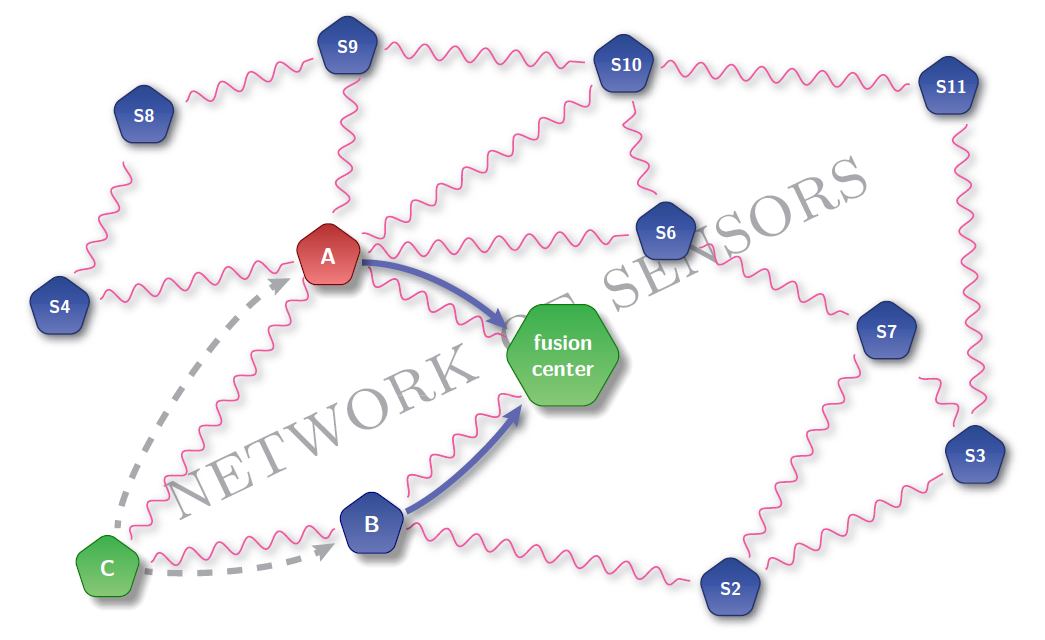

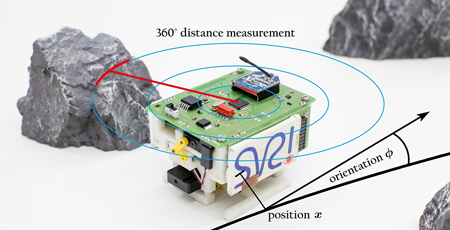

Information Processing in Networks

The amount of sensor data provided by technical systems is steadily increasing. In the near future, the number of such systems will rise even more drastically once low-power, widely distributed devices can affordably be connected to low-power wide-area networks. The model-based techniques for sensor-actuator networks developed at ISAS make it possible to reconstruct and identify complex distributed phenomena (such as a pollutant concentration) using just a small number of measurements [IFAC14_Noack]. By systematically treating the uncertainties involved, the information gain of future measurements can be predicted. This enables sensor scheduling that is optimal with respect to high measurement accuracy and low energy consumption and communication load [ACC18_Rosenthal].
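Why the information gain is predictable has a simple basis in the linear-Gaussian case: the Kalman covariance update depends only on the measurement model and noise, not on the value that will be measured. The sketch below uses this to pick sensors greedily by the resulting covariance trace. The three-cell state, prior covariance, sensor models, and greedy trace criterion are assumptions for illustration. Energy and communication costs are left out.

```python
import numpy as np

def posterior_cov(P, H, R):
    """Kalman covariance update; depends only on H and R, not on the measured value."""
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    return P - K @ H @ P

def schedule(P, sensors, budget):
    """Greedily pick `budget` sensors that most reduce the trace of the covariance."""
    chosen = []
    for _ in range(budget):
        candidates = [i for i in range(len(sensors)) if i not in chosen]
        best = min(candidates, key=lambda i: np.trace(posterior_cov(P, *sensors[i])))
        chosen.append(best)
        P = posterior_cov(P, *sensors[best])
    return chosen, P

# Hypothetical concentrations in three neighbouring cells with correlated prior.
P0 = np.array([[4.0, 1.0, 0.0],
               [1.0, 4.0, 1.0],
               [0.0, 1.0, 1.0]])
# One candidate sensor per cell: (measurement matrix H, noise covariance R).
sensors = [(np.array([[1.0, 0.0, 0.0]]), np.array([[0.5]])),
           (np.array([[0.0, 1.0, 0.0]]), np.array([[0.5]])),
           (np.array([[0.0, 0.0, 1.0]]), np.array([[0.5]]))]

chosen, P_post = schedule(P0, sensors, budget=2)
```

The middle cell is chosen first because it is both uncertain and correlated with its neighbours, so measuring it also reduces their uncertainty.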

A further aspect in reducing communication and computation costs, and in applying sensor-actuator networks efficiently, is decentralized processing, i.e., executing the developed algorithms in a distributed manner across all nodes. Here, accounting for the stochastic dependencies between the nodes' estimates is very challenging [CRC15_Noack]. Robust methods that explicitly model these dependencies are currently being investigated at ISAS. In particular, methods tailored to the problem of common information are studied [Automatica17_Noack].
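Why these dependencies matter can be seen in the extreme case of two nodes holding the same estimate: fusing them as if they were independent halves the covariance, although no new information was added. Covariance intersection is one well-known conservative fusion rule that stays consistent for any unknown cross-correlation. It is shown here only as a reference point and is not claimed to be the method of [CRC15_Noack] or [Automatica17_Noack]. The grid search over the weight is a simplification for brevity.

```python
import numpy as np

def naive_fusion(xa, Pa, xb, Pb):
    """Fusion that assumes independent estimates (information form)."""
    Ia, Ib = np.linalg.inv(Pa), np.linalg.inv(Pb)
    P = np.linalg.inv(Ia + Ib)
    return P @ (Ia @ xa + Ib @ xb), P

def covariance_intersection(xa, Pa, xb, Pb, n_grid=101):
    """Covariance intersection: consistent for any (unknown) cross-correlation."""
    Ia, Ib = np.linalg.inv(Pa), np.linalg.inv(Pb)
    best = None
    for w in np.linspace(0.0, 1.0, n_grid):
        P = np.linalg.inv(w * Ia + (1.0 - w) * Ib)
        # Keep the weight that minimizes the trace of the fused covariance.
        if best is None or np.trace(P) < np.trace(best[1]):
            best = (P @ (w * Ia @ xa + (1.0 - w) * Ib @ xb), P)
    return best

# Two nodes that received the same information: their errors are identical.
x = np.array([1.0, 2.0])
P = np.eye(2)
x_naive, P_naive = naive_fusion(x, P, x, P)             # overconfident: 0.5 * I
x_ci, P_ci = covariance_intersection(x, P, x, P)        # stays at I
```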

For optimal data fusion, we have proposed novel techniques to reconstruct the cross-correlations between sensor node estimates by keeping a limited set of deterministic samples [Fusion18_Radtke]. For this purpose, each node computes a set of deterministic samples that provides all the information required to reassemble the cross-covariance matrix for each pair of estimates. As the number of samples grows over time, methods to reduce the size of the sample set are being developed and studied.
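A simplified, centrally simulated version of the sample idea can be sketched under these assumptions: linear Kalman filters at each node, a common prior, shared process noise, and independent measurement noise. Each node keeps a matrix of deterministic samples (columns) such that the product of two nodes' sample matrices equals their cross-covariance. The zero-padding that keeps columns aligned across nodes and all function names are assumptions of this sketch, not necessarily the exact scheme of [Fusion18_Radtke]. The growing column count shows why sample reduction is needed.

```python
import numpy as np

def kalman_gain(P, H, R):
    return P @ H.T @ np.linalg.inv(H @ P @ H.T + R)

def simulate_nodes(F, Q, P0, nodes, steps):
    """Run local Kalman covariance recursions plus deterministic samples per node.

    nodes: list of (H, R) pairs. Returns the local covariances, the per-node sample
    matrices, and, for checking, the cross-covariance of nodes 0 and 1 propagated
    with the classical recursion.
    """
    n = F.shape[0]
    I = np.eye(n)
    LQ = np.linalg.cholesky(Q)
    P = [P0.copy() for _ in nodes]
    S = [np.linalg.cholesky(P0) for _ in nodes]  # common prior: identical samples
    P01 = P0.copy()
    for _ in range(steps):
        # Prediction: all nodes append the SAME samples of the shared process noise.
        P = [F @ Pi @ F.T + Q for Pi in P]
        S = [np.hstack([F @ Si, LQ]) for Si in S]
        P01 = F @ P01 @ F.T + Q
        # Update: each node processes only its own measurement.
        K = [kalman_gain(Pi, H, R) for Pi, (H, R) in zip(P, nodes)]
        A = [I - Ki @ H for Ki, (H, _) in zip(K, nodes)]
        new_S = []
        for i in range(len(nodes)):
            blocks = [A[i] @ S[i]]
            for j, (_, Rj) in enumerate(nodes):
                # Own measurement-noise samples; zeros for the other nodes' independent
                # noise so that columns stay aligned across all nodes (assumption).
                blocks.append(-K[i] @ np.linalg.cholesky(Rj) if j == i
                              else np.zeros((n, Rj.shape[0])))
            new_S.append(np.hstack(blocks))
        S = new_S
        P = [Ai @ Pi @ Ai.T + Ki @ R @ Ki.T for Ai, Pi, Ki, (_, R) in zip(A, P, K, nodes)]
        P01 = A[0] @ P01 @ A[1].T
    return P, S, P01

# Hypothetical constant-velocity model; node 0 measures position, node 1 velocity.
F = np.array([[1.0, 1.0], [0.0, 1.0]])
Q = 0.1 * np.array([[1 / 3, 1 / 2], [1 / 2, 1.0]])
nodes = [(np.array([[1.0, 0.0]]), np.array([[0.5]])),
         (np.array([[0.0, 1.0]]), np.array([[0.2]]))]
P, S, P01 = simulate_nodes(F, Q, np.eye(2), nodes, steps=5)
cross_cov = S[0] @ S[1].T  # reassembled from the two nodes' samples
```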

Contact: Florian Pfaff

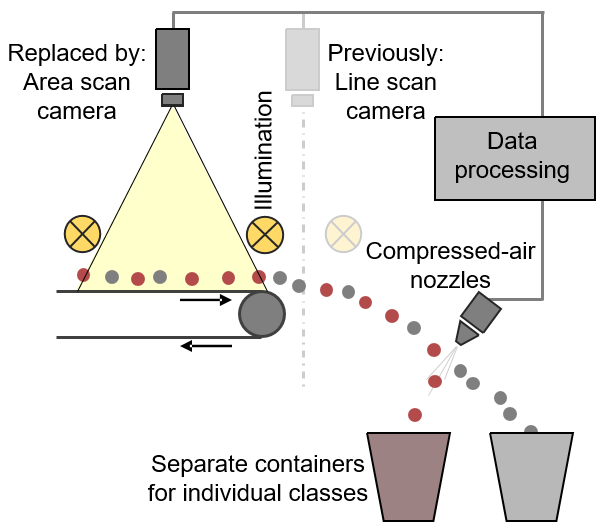

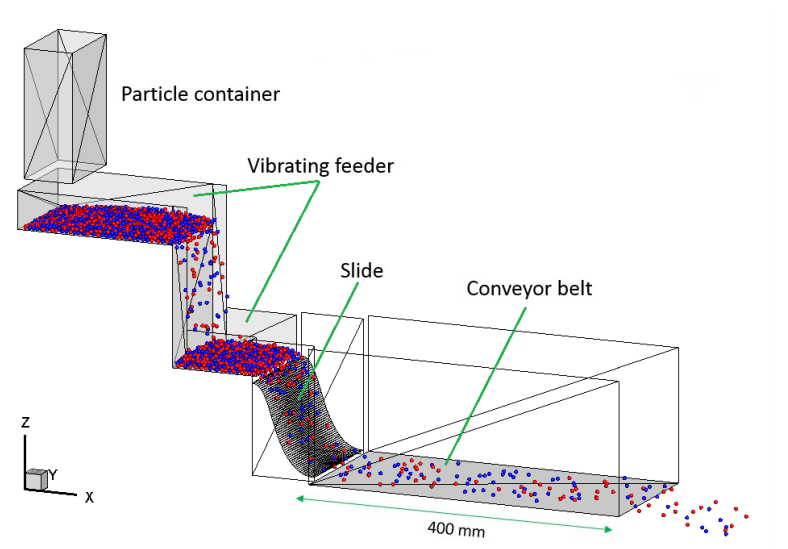

Multitarget Tracking for Optical Belt Sorting

In optical belt sorting, a stream of bulk material is separated into two fractions by targeting selected particles in flight with bursts of compressed air. In industrial applications, optical belt sorters base the separation on a single observation of each particle, captured by a line scan camera. As this requires strict assumptions about the particles' motion, the selection or timing of the nozzles can be incorrect. In our IGF-funded project "Inside Schüttgut", we have integrated multitarget tracking techniques into optical belt sorting via the use of an area scan camera [MFI15_Pfaff, TM16_Pfaff] to improve the nozzle control.
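The benefit of tracking for nozzle control can be illustrated with a constant-velocity prediction of when and where a particle crosses the nozzle bar. All geometry values are hypothetical, and the constant-velocity model is only the simplest choice; the project itself required carefully defined motion models.

```python
import math

def nozzle_command(pos, vel, bar_position, nozzle_pitch, n_nozzles):
    """Predict when and at which nozzle a particle crosses the nozzle bar.

    pos = (s, l): position along and across the belt in m; vel = (v_s, v_l) in m/s.
    Uses a constant-velocity model; returns (time until crossing in s, nozzle index)
    or None if the particle will not pass one of the nozzles.
    """
    s, l = pos
    v_s, v_l = vel
    if v_s <= 0.0 or s > bar_position:
        return None
    dt = (bar_position - s) / v_s
    l_at_bar = l + v_l * dt
    nozzle = math.floor(l_at_bar / nozzle_pitch)
    if not 0 <= nozzle < n_nozzles:
        return None
    return dt, nozzle

# Hypothetical setup: nozzle bar 0.5 m downstream, 32 nozzles with 10 mm pitch.
# With tracking, the particle's own velocity (including lateral drift) is known.
tracked = nozzle_command((0.30, 0.052), (2.8, 0.15), 0.5, 0.01, 32)
# With a single observation, a nominal velocity (belt speed, no lateral motion)
# has to be assumed for the same particle.
nominal = nozzle_command((0.30, 0.052), (3.0, 0.0), 0.5, 0.01, 32)
```

In this example, the nominal assumption fires the wrong nozzle and too early, whereas the tracked velocity selects the neighbouring nozzle at a later time.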

Applying multitarget tracking to bulk material sorting is nontrivial, as we cannot rely on the image processing to determine which measurements stem from the same particle. To ensure successful tracking, accurate motion models had to be defined and the tracking had to be tailored to the problem at hand. Further scenario-specific knowledge that can be derived and used to improve the tracking includes the average velocity at the beginning of the belt and the likelihood that a particle has newly arrived on the belt or has left it.
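Since the image processing cannot tell which measurement belongs to which particle, the association has to be inferred from the predicted motion. A minimal global-nearest-neighbour sketch with gating follows. It is one common association scheme, not necessarily the one used in [MFI15_Pfaff], and the exhaustive search only works for a handful of particles; practical systems use assignment algorithms instead. Positions, velocities, and the gate size are hypothetical.

```python
from itertools import permutations

def predict(track, dt):
    """Constant-velocity prediction of a track state (s, l, v_s, v_l) in m and m/s."""
    s, l, v_s, v_l = track
    return (s + v_s * dt, l + v_l * dt)

def associate(predicted, measurements, gate):
    """Global nearest-neighbour association by exhaustive search (small examples only).

    Returns {track index: measurement index}. Unassigned measurements are candidates
    for newly arrived particles; unassigned tracks for particles that left the belt.
    """
    options = list(range(len(measurements))) + [None] * len(predicted)
    best, best_cost = None, float("inf")
    for choice in permutations(options, len(predicted)):
        cost = 0.0
        for track, meas in enumerate(choice):
            if meas is None:
                cost += gate ** 2  # penalty for "no measurement for this track"
                continue
            d2 = sum((p - m) ** 2 for p, m in zip(predicted[track], measurements[meas]))
            if d2 > gate ** 2:
                cost = float("inf")  # outside the gate: not allowed
                break
            cost += d2
        if cost < best_cost:
            best, best_cost = choice, cost
    return {t: m for t, m in enumerate(best) if m is not None}

# Two tracked particles side by side and three new detections (one at the belt start).
tracks = [(0.10, 0.020, 3.0, 0.0), (0.10, 0.030, 3.0, 0.0)]
predicted = [predict(t, dt=0.005) for t in tracks]
measurements = [(0.1152, 0.0302), (0.1149, 0.0199), (0.02, 0.025)]
assignment = associate(predicted, measurements, gate=0.003)
```

Here measurement 2 stays unassigned and would start a new track, which is where the likelihood of newly arrived particles enters a full tracker.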

Experiments have been performed on simulated data generated using the discrete element method [PowTec16_Pieper, MFI16_Pfaff] and on image data obtained on a real optical belt sorter [MFI15_Pfaff], and showed significant improvements. Aside from improving the separation results, we were also able to tell particles of different classes apart based on their motion behavior on the belt [OCM17_Maier, TM17_Maier]. Current research focuses on further improving the reliability of the tracking, e.g., by using the orientation [MFI17_Pfaff] of the particles or other features that can be extracted from the visual data. Further ongoing research includes the derivation of new motion models and inferring additional characteristics of the particles from their observed motion.

Contact: Marcel Reith-Braun, Florian Pfaff

Directional Estimation for Robotic Perception and Pose Tracking

Left: A visual SLAM system based on directional estimation using stereo cameras, with tracking and mapping results on the KITTI dataset.

Middle and right: Examples of the Bingham distribution on S² for varying parameter matrices Z and M.

Directional estimation comprises recursive estimation algorithms on directional manifolds, e.g., the unit circle, the unit hypersphere, and the torus. This is achieved by applying directional statistics [DS_Mardia] to model uncertain directional variables stochastically, directly on their periodic and nonlinear manifolds, and by deriving corresponding filtering algorithms.
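Why modeling directly on the manifold matters is easiest to see on the unit circle: the arithmetic mean of 350° and 10° is 180°, the opposite direction. The sketch below computes the circular mean instead and performs an exact Bayesian measurement update for a von Mises prior and von Mises likelihood, using the standard identity that their product is again proportional to a von Mises density. It is an illustration only; the filters in [CDC14_Kurz] also handle cases such as prediction steps, where no such closed form exists.

```python
import cmath
import math

def circular_mean(angles):
    """Mean direction: angle of the summed unit vectors, not the arithmetic mean."""
    return cmath.phase(sum(cmath.exp(1j * a) for a in angles))

def von_mises_update(mu_prior, kappa_prior, mu_meas, kappa_meas):
    """Exact Bayesian update with a von Mises prior and a von Mises likelihood.

    exp(k1*cos(x - m1)) * exp(k2*cos(x - m2)) = exp(k*cos(x - m)) with
    k * exp(i*m) = k1 * exp(i*m1) + k2 * exp(i*m2).
    """
    z = kappa_prior * cmath.exp(1j * mu_prior) + kappa_meas * cmath.exp(1j * mu_meas)
    return cmath.phase(z), abs(z)

angles = [math.radians(350), math.radians(10)]
naive_deg = (350 + 10) / 2                # 180 degrees: points the opposite way
mean = circular_mean(angles)              # close to 0 rad, as expected
mu, kappa = von_mises_update(math.radians(350), 2.0, math.radians(10), 2.0)
```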

At ISAS, we have proposed a series of directional estimators for filtering angular data [CDC14_Kurz], orientations [TAC16_Gilitschenski], and more. In the DFG-funded project "Recursive Estimation of Rigid Body Motions", we specifically study robust and accurate directional estimation algorithms on SO(2), SO(3), SE(2), and SE(3), which are the groups of planar rotations (1 DoF), spatial rotations (3 DoF), planar rigid body motions (3 DoF), and general spatial rigid body motions (6 DoF), respectively. Unlike conventional stochastic estimators, which typically rely on local linearization, our approaches aim to provide (1) better consideration of the nonlinear structure of the underlying manifold, (2) a direct probabilistic interpretation of the correlation between translation and rotation [Fusion18_Li-SE2], and (3) efficient and robust pose tracking and perception from noisy measurements [Fusion18_Li-SLAM], even under fast and abrupt motions.
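Point (2) can be illustrated with SE(2): if only the heading is uncertain and the body moves forward, the resulting positions lie on an arc rather than in an ellipse, and the lateral position is strongly correlated with the heading. The sketch composes homogeneous SE(2) matrices for a hypothetical set of deterministic heading samples; it is not one of the ISAS estimators.

```python
import numpy as np

def se2(x, y, theta):
    """Homogeneous 3x3 matrix of a planar rigid body motion (element of SE(2))."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x], [s, c, y], [0.0, 0.0, 1.0]])

# Heading uncertainty represented by deterministic samples (hypothetical spread, rad).
thetas = np.linspace(-0.6, 0.6, 13)
step = se2(1.0, 0.0, 0.0)  # drive 1 m forward in the body frame

# Compose each uncertain start pose with the motion and keep the translation part.
ends = np.array([(se2(0.0, 0.0, th) @ step)[:2, 2] for th in thetas])

mean_x = ends[:, 0].mean()                       # below 1: samples lie on an arc
corr = np.corrcoef(thetas, ends[:, 1])[0, 1]     # heading vs. lateral position
```

A Gaussian in the translation alone would place probability mass off this arc, which is one reason to model pose uncertainty on the group itself.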

The directional approaches for estimating rigid body motions can be used in many scenarios, of which simultaneous localization and mapping (SLAM) and pose tracking in sensor networks are our central interests. These fundamental applications can be further extended to 3-D reconstruction, multilateration, autonomous driving, and robotic manipulation as well as navigation. In such applications, directional estimation methods are combined with other techniques, e.g., progressive filtering, pose interpolation and extrapolation, mapping, pose graph optimization, distributed estimation, and sensor fusion.

Contact: Florian Pfaff

Stochastic Control

In Model Predictive Control (MPC), not only the current state of a technical system (e.g., a mobile robot) is considered in the control task, but also a prediction of its future behavior. This leads to a significant improvement in control quality, since the influence of the applied control inputs on the future system behavior is taken into account. The stochastic methods developed at ISAS [ECC16_Chlebek, CDC16_Dolgov, ACC18_Dolgov] are especially well suited for nonlinear systems that are strongly affected by noise, as they explicitly consider these aspects in the control. This is accomplished by integrating the state estimation techniques developed at ISAS.
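To illustrate what explicitly considering noise in the control can change, here is a toy sampling-based stochastic MPC for a hypothetical scalar system whose input effect is uncertain (multiplicative noise). It evaluates every input sequence on a coarse grid by Monte Carlo, reusing the same noise samples for all candidates, and applies only the first input (receding horizon). Model, cost weights, grid, and sample count are assumptions; this is not the method of [ECC16_Chlebek, CDC16_Dolgov, ACC18_Dolgov].

```python
import itertools
import numpy as np

def expected_cost(x0, u_seq, r, b, sigma, eps, rho=0.01):
    """Monte Carlo estimate of the expected tracking cost of an input sequence.

    Hypothetical model with multiplicative input noise:
        x[k+1] = x[k] + b * u[k] * (1 + sigma * eps[k]),  eps[k] ~ N(0, 1)
    """
    x = np.full(eps.shape[0], float(x0))
    cost = np.zeros(eps.shape[0])
    for k, u in enumerate(u_seq):
        x = x + b * u * (1.0 + sigma * eps[:, k])
        cost += (x - r) ** 2 + rho * u ** 2
    return cost.mean()

def smpc_step(x0, r, b=1.0, sigma=0.5, horizon=2, n_samples=2000, seed=0):
    """Optimize the whole input sequence, but return only its first element."""
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal((n_samples, horizon))  # common random numbers
    grid = np.linspace(-2.0, 2.0, 41)
    best = min(itertools.product(grid, repeat=horizon),
               key=lambda u_seq: expected_cost(x0, u_seq, r, b, sigma, eps))
    return best[0]

u_nominal = smpc_step(0.0, 1.0, sigma=0.0)     # ignores the noise: close to 1
u_stochastic = smpc_step(0.0, 1.0, sigma=0.5)  # accounts for it: more cautious
```

With the noise taken into account, the first input becomes noticeably smaller, because a large input also amplifies the uncertainty of the next state.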

Currently, one research focus is on applying these techniques to Networked Control Systems (NCS), where the components of the control loop communicate over general-purpose networks. Here, network imperfections (e.g., delays and packet losses) must be considered in addition to the control task, so specialized control and estimation methods that explicitly consider the underlying network are required [ACC13_Fischer, ACC15_Dolgov, MFI17_Rosenthal, LNEE18_Rosenthal]. Such control applications naturally arise in Cyber-Physical Systems (CPS), which have become steadily more common in both industrial and home environments in recent years. In the project CoCPN: Cooperative Cyber Physical Networking, we aim to develop approaches for a more integrated operation of control and communication systems, together with the Institute of Telematics (Prof. Zitterbart).
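One common way to cope with packet losses in NCS is sequence-based control: the controller sends a sequence of future inputs in every packet, and a buffer at the actuator keeps applying the newest sequence it has received. The minimal sketch below shows only the actuator side, with a zero default input once the buffer runs out. The class names, loss pattern, and default input are hypothetical, and the sketch is not claimed to match the methods in the references cited above.

```python
from dataclasses import dataclass

@dataclass
class Packet:
    sent_at: int   # time step at which the controller computed the sequence
    inputs: list   # control inputs intended for steps sent_at, sent_at + 1, ...

class BufferingActuator:
    """Actuator that stores the newest control sequence and plays it back."""

    def __init__(self, default=0.0):
        self.default = default
        self.buffer = None

    def receive(self, packet):
        # Ignore packets older than the buffered one (out-of-order delivery).
        if self.buffer is None or packet.sent_at > self.buffer.sent_at:
            self.buffer = packet

    def apply(self, k):
        """Input applied at time step k: the matching entry, else the default."""
        if self.buffer is None:
            return self.default
        idx = k - self.buffer.sent_at
        if 0 <= idx < len(self.buffer.inputs):
            return self.buffer.inputs[idx]
        return self.default

# Controller sends 3-step sequences; packets of steps 2, 3, 5, 6, 7 are lost.
actuator = BufferingActuator()
lost = {2, 3, 5, 6, 7}
applied = []
for k in range(8):
    if k not in lost:
        actuator.receive(Packet(k, [10 * k + i for i in range(3)]))
    applied.append(actuator.apply(k))
# applied == [0, 10, 11, 12, 40, 41, 42, 0.0]
```

Longer sequences bridge longer outages, at the cost of relying on increasingly outdated predictions.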

Contact: Markus Walker, Marcel Reith-Braun